|

ConvNet

1.0

A GPU-based C++ implementation of Convolutional Neural Nets

|

|

ConvNet

1.0

A GPU-based C++ implementation of Convolutional Neural Nets

|

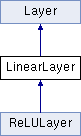

Implements a layer with a linear activation function. More...

#include <layer.h>

Public Member Functions | |

| LinearLayer (const config::Layer &config) | |

| virtual void | AllocateMemory (int imgsize, int batch_size) |

| Allocate memory for storing the state and derivative at this layer. More... | |

| virtual void | ApplyActivation (bool train) |

| Apply the activation function. More... | |

| virtual void | ApplyDerivativeOfActivation () |

| Apply the derivative of the activation. More... | |

| virtual void | ComputeDeriv () |

| Compute derivative of loss function. More... | |

| virtual float | GetLoss () |

| Compute the value of the loss function that is displayed during training. More... | |

Public Member Functions inherited from Layer Public Member Functions inherited from Layer | |

| Layer (const config::Layer &config) | |

| Instantiate a layer from config. More... | |

| virtual float | GetLoss2 () |

| Compute the value of the actual loss function. More... | |

| void | ApplyDropout (bool train) |

| Apply dropout to this layer. More... | |

| void | ApplyDerivativeofDropout () |

| Apply derivative of dropout. More... | |

| void | AccessStateBegin () |

| void | AccessStateEnd () |

| void | AccessDerivBegin () |

| void | AccessDerivEnd () |

| Edge * | GetIncomingEdge (int index) |

| Returns the incoming edge by index. More... | |

| Matrix & | GetState () |

| Returns a reference to the state of the layer. More... | |

| Matrix & | GetDeriv () |

| Returns a reference to the deriv at this layer. More... | |

| Matrix & | GetData () |

| Returns a reference to the data at this layer. More... | |

| void | Display () |

| void | Display (int image_id) |

| void | AddIncoming (Edge *e) |

| Add an incoming edge to this layer. More... | |

| void | AddOutgoing (Edge *e) |

| Add an outgoing edge from this layer. More... | |

| const string & | GetName () const |

| int | GetNumChannels () const |

| int | GetSize () const |

| bool | IsInput () const |

| bool | IsOutput () const |

| int | GetGPUId () const |

| void | AllocateMemoryOnOtherGPUs () |

| Matrix & | GetOtherState (int gpu_id) |

| Matrix & | GetOtherDeriv (int gpu_id) |

| void | SyncIncomingState () |

| void | SyncOutgoingState () |

| void | SyncIncomingDeriv () |

| void | SyncOutgoingDeriv () |

Additional Inherited Members | |

Static Public Member Functions inherited from Layer Static Public Member Functions inherited from Layer | |

| static Layer * | ChooseLayerClass (const config::Layer &layer_config) |

Public Attributes inherited from Layer Public Attributes inherited from Layer | |

| vector< Edge * > | incoming_edge_ |

| vector< Edge * > | outgoing_edge_ |

| bool | has_incoming_from_same_gpu_ |

| bool | has_outgoing_to_same_gpu_ |

| bool | has_incoming_from_other_gpus_ |

| bool | has_outgoing_to_other_gpus_ |

Protected Member Functions inherited from Layer Protected Member Functions inherited from Layer | |

| void | ApplyDropoutAtTrainTime () |

| void | ApplyDropoutAtTestTime () |

Protected Attributes inherited from Layer Protected Attributes inherited from Layer | |

| const string | name_ |

| const int | num_channels_ |

| const bool | is_input_ |

| const bool | is_output_ |

| const float | dropprob_ |

| const bool | display_ |

| const bool | dropout_scale_up_at_train_time_ |

| const bool | gaussian_dropout_ |

| const float | max_act_gaussian_dropout_ |

| int | scale_targets_ |

| int | image_size_ |

| Matrix | state_ |

| Matrix | deriv_ |

| State (activation) of the layer. More... | |

| Matrix | data_ |

| Deriv of the loss function w.r.t. More... | |

| Matrix | rand_gaussian_ |

| Data (targets) associated with this layer. More... | |

| map< int, Matrix > | other_states_ |

| Need to store random variates when doing gaussian dropout. More... | |

| map< int, Matrix > | other_derivs_ |

| Copies of this layer's state on other gpus. More... | |

| ImageDisplayer * | img_display_ |

| Copies of this layer's deriv on other gpus. More... | |

| const int | gpu_id_ |

| set< int > | other_incoming_gpu_ids_ |

| set< int > | other_outgoing_gpu_ids_ |

Implements a layer with a linear activation function.

|

virtual |

Allocate memory for storing the state and derivative at this layer.

| imgsize | The spatial size of the layer (width and height). |

| batch_size | The mini-batch size. |

Reimplemented from Layer.

|

virtual |

|

virtual |

Apply the derivative of the activation.

Derived classes must implement this. Computes the derivative w.r.t the inputs to this layer from the derivative w.r.t the outputs of this layer. Applies the derivative of the activation function to deriv_ and overwrites it.

Implements Layer.

Reimplemented in ReLULayer.

|

virtual |

Compute derivative of loss function.

This is applicable only if this layer is an output layer.

Implements Layer.

|

virtual |

Compute the value of the loss function that is displayed during training.

This is applicable only if this layer is an output layer.

Implements Layer.

1.8.7

1.8.7